spy & security

and I don’t mean to make the ingestion for the moment that he was guilbey of gulpable gluttony as regards chew-able boltaballs

The best-known member of Elon Musk’s U.S. DOGE Service team of technologists once provided support to a cybercrime gang that bragged about trafficking in stolen data and cyberstalking an FBI agent, according to digital records reviewed by Reuters.

{ Reuters | Continue reading }

Life in the state of nature was less violent than you might think. Most of our ancestors avoided conflict. But this made them vulnerable to a few psychopaths.

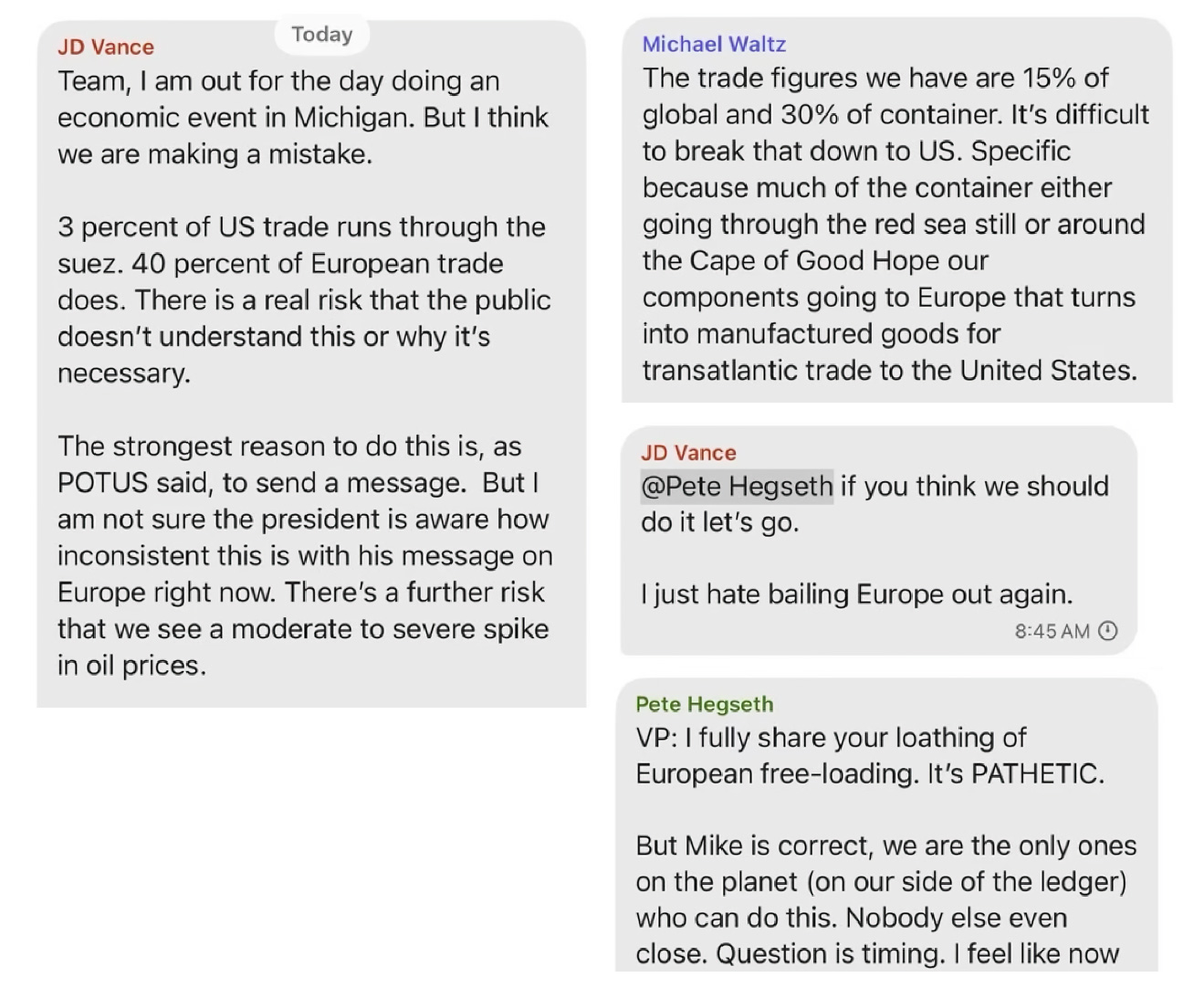

related { … Hegseth. He has released information that could have directly led to the death of an American fighter pilot. […] “he’s doing performative activities. He’s not yet demonstrated that he’s running the department.” | NY Times | It is an uncomfortable episode for the new defense secretary, who has vowed to hold senior military leaders accountable for mistakes. | Washington Post }

He loves us enough to allow us to be hurt in the short-term if it leads to our salvation in the long run

Cybercrime is already a huge, multi-trillion-dollar problem, and one that most victims don’t like to talk about. It is said to be bigger than the entire global drug trade. Four things could make it much worse in 2025.

First, generative AI, rising in popularity and declining in price, is a perfect tool for cyberattackers. Although it is unreliable and prone to hallucinations, it is terrific at producing plausible-sounding text (e.g., phishing messages that trick people into revealing credentials) and deepfaked videos at virtually zero cost, allowing attackers to broaden their attacks.

Second, large language models are notoriously susceptible to jailbreaking and things like “prompt-injection attacks,” for which no known solution exists.

Third, generative AI tools are increasingly being used to write code; some of the people using them don’t fully understand the code being written, and autogenerated code has already been shown, in some cases, to introduce new security holes.

Finally, in the midst of all this, the new U.S. administration seems determined to deregulate as much as possible, slashing costs and even publicly shaming employees. Federal employees who do their jobs may be frightened, and many will be tempted to look elsewhere; enforcement and investigations will almost certainly decline in both quality and quantity, leaving the world quite vulnerable to ever more audacious attacks.

related { 2025 deepfake threat predictions from biometrics, cybersecurity insiders }

Shaken, not stirred

One instructor at the CIA came up with an ingenious way to use the Starbucks gift card as a signaling tool instead of the traditional chalk marks and lowered window blinds.

He gives one [gift card] to each of his assets and tells them, “If you need to see me, buy a coffee.” Then he checks the card numbers on a cybercafé computer each day, and if the balance on one is depleted, he knows he’s got a meeting. Saves him having to drive past a whole slew of different physical signal sites each day [to check for chalk marks and lowered window blinds]. And the card numbers aren’t tied to identities, so the whole thing is pretty secure.
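The handler’s daily check amounts to a simple change-detection loop. The sketch below is hypothetical: there is no real card-balance API here, so balances are passed in as a plain dictionary (standing in for whatever the handler reads off the cybercafé screen), the card identifiers are made up, and any drop in balance, not only a fully emptied card, is treated as the “meet me” signal.

```python
from dataclasses import dataclass, field


@dataclass
class SignalBoard:
    """Hypothetical handler-side ledger of each asset's gift-card balance."""

    last_seen: dict[str, float] = field(default_factory=dict)

    def register(self, card_number: str, balance: float) -> None:
        # Record the starting balance when a card is handed to an asset.
        self.last_seen[card_number] = balance

    def check(self, current_balances: dict[str, float]) -> list[str]:
        """Return the cards whose balance fell since the previous check."""
        signals = []
        for card, balance in current_balances.items():
            previous = self.last_seen.get(card)
            # Assumption: any spend at all counts as a request for a meeting.
            if previous is not None and balance < previous:
                signals.append(card)
            # Remember today's balance so the same purchase is not reported twice.
            self.last_seen[card] = balance
        return signals


board = SignalBoard()
board.register("card-A", 10.00)
board.register("card-B", 10.00)
print(board.check({"card-A": 10.00, "card-B": 5.25}))  # ['card-B']
print(board.check({"card-A": 10.00, "card-B": 5.25}))  # []
```

Because the ledger is updated on every check, a single coffee raises exactly one signal; the asset has to spend again to call a second meeting.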

Next time, you might not want to wear bright blue. It means the stag can see you.

At a time when digital media is deepening social divides in Western democracies, China is manipulating online discourse to enforce the Communist Party’s consensus. To stage-manage what appeared on the Chinese internet early this year, the authorities issued strict commands on the content and tone of news coverage, directed paid trolls to inundate social media with party-line blather and deployed security forces to muzzle unsanctioned voices. […]

Researchers have estimated that hundreds of thousands of people in China work part-time to post comments and share content that reinforces state ideology. Many of them are low-level employees at government departments and party organizations. Universities have recruited students and teachers for the task. Local governments have held training sessions for them. […]

Local officials turned to informants and trolls to control opinion […] “Mobilized the force of more than 1,500 cybersoldiers across the district to promptly report information about public opinion in WeChat groups and other semiprivate chat circles.”

related/audio { The Chinese Surveillance State | Part 1, Part 2 }

‘Blackmail is more effective than bribery.’ –John le Carré

For 6 months of 2020, I’ve been working on […] a wormable radio-proximity exploit which allows me to gain complete control over any iPhone in my vicinity. View all the photos, read all the email, copy all the private messages and monitor everything which happens on there in real-time.

Sometimes it feels like no one sees the good things you do. Like you’re just alone.

U.S. government agencies from the military to law enforcement have been buying up mobile-phone data from the private sector to use in gathering intelligence, monitoring adversaries and apprehending criminals. Now, the U.S. Air Force is experimenting with the next step.

SignalFrame’s product can turn civilian smartphones into listening devices—also known as sniffers—that detect wireless signals from any device that happens to be nearby. The company, in its marketing materials, claims to be able to distinguish a Fitbit from a Tesla from a home-security device, recording when and where those devices appear in the physical world.

Using the SignalFrame technology, “one device can walk into a bar and see all other devices in that place,” said one person who heard a pitch for the SignalFrame product at a marketing industry event. […]

Data collection of this type works only on phones running the Android operating system made by Alphabet Inc.’s Google, according to Joel Reardon, a computer science professor at the University of Calgary. Apple Inc. doesn’t allow third parties to get similar access on its iPhone line.

photo { William Eggleston, Untitled (Greenwood, Mississippi), 2001 }

‘The wise man waters gently; the fool floods everything at once.’ –Florian

The group, known to researchers as “Dragonfly” or “Energetic Bear” for its hackings of the energy sector, was not involved in 2016 election hacking. But it has in the past five years breached the power grid, water treatment facilities and even nuclear power plants, including one in Kansas.

It also hacked into Wi-Fi systems at San Francisco International Airport and at least two other West Coast airports in March in an apparent bid to find one unidentified traveler, a demonstration of the hackers’ power and resolve.

September’s intrusions marked the first time that researchers caught the group, a unit of Russia’s Federal Security Service, or F.S.B., targeting states and counties. The timing of the attacks so close to the election and the potential for disruption set off concern inside private security firms, law enforcement and intelligence agencies. […]

“This appears to be preparatory, to ensure access when they decide they need it,” […] Energetic Bear typically casts a wide net, then zeros in on a few high-value targets. […] They could take steps like pulling offline the databases that verify voters’ signatures on mail-in ballots, or given their particular expertise, shutting power to key precincts. […]

Officials at San Francisco International Airport discovered Russia’s state hackers had breached the online system that airport employees and travelers used to gain access to the airport’s Wi-Fi. The hackers injected code into two Wi-Fi portals that stole visitors’ user names, cracked their passwords and infected their laptops. The attack began on March 17 and continued for nearly two weeks until it was shut down. […] As pervasive as the attacks could have been, researchers believe Russia’s hackers were interested only in one specific person traveling through the airports that day.

And she lit up and fireland was ablaze

A range of methods have been applied for user authentication on smartphones and smart watches, such as password, PIN and fingerprint. […] In this paper, a new biometric trait, finger snapping, is applied for person authentication.

Finger snapping is the act of making an impulsive sound with one’s fingers and palm. It is usually done by pressing the thumb against another (middle, index or ring) finger and then moving that finger sharply downward to strike the palm. A finger snap involves physiological characteristics, that is, inherited traits of the human body, since the sound it produces varies with palm size and skin texture. It also involves behavioral characteristics, that is, patterns a person has learned, since it is the movement of the finger that creates the sound.

A survey was carried out among 74 people on whether they can snap their fingers and would accept finger-snapping authentication. Results show that 86.5% of the respondents can snap their fingers, of whom 89.2% would like to authenticate themselves with a simple finger snap. In addition, during our finger-snapping data collection phase, we found that people who could not snap their fingers were able to learn once the method was explained to them.

previously { Silicon Valley Legends Launch Beyond Identity in Quest to Eliminate Passwords }

photo { Guen Fiore }

Had I no eyes but ears, my ears would love

The Federal Bureau of Investigation is issuing this announcement to encourage Americans to exercise caution when using hotel wireless networks (Wi-Fi) for telework. FBI has observed a trend where individuals who were previously teleworking from home are beginning to telework from hotels. US hotels, predominantly in major cities, have begun to advertise daytime room reservations for guests seeking a quiet, distraction-free work environment. While this option may be appealing, accessing sensitive information from hotel Wi-Fi poses an increased security risk over home Wi-Fi networks. Malicious actors can exploit inconsistent or lax hotel Wi-Fi security and guests’ security complacency to compromise the work and personal data of hotel guests. Following good cyber security practices can minimize some of the risks associated with using hotel Wi-Fi for telework.

Attackers target hotels to obtain records of guest names, personal information, and credit card numbers. The hotel environment involves many unaffiliated guests, operating in a confined area, and all using the same wireless network. Guests are largely unable to control, verify, or monitor network security. Cyber criminals can take advantage of this environment to monitor a victim’s internet browsing or redirect victims to false login pages. Criminals can also conduct an “evil twin attack” by creating their own malicious network with a similar name to the hotel’s network. Guests may then mistakenly connect to the criminal’s network instead of the hotel’s, giving the criminal direct access to the guest’s computer. […]
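One rough way to spot the lookalike networks described above is to compare nearby network names against the hotel’s official one. The sketch below is a hypothetical heuristic, not an FBI recommendation or a real product: the scan results, the official SSID, the list of known access-point addresses (BSSIDs) and the 0.8 similarity threshold are all assumptions, and an attacker can clone an SSID exactly and spoof a BSSID, so a clean result proves nothing.

```python
from difflib import SequenceMatcher
from typing import NamedTuple


class ScanResult(NamedTuple):
    ssid: str   # network name as broadcast
    bssid: str  # access point's MAC address


def suspicious_networks(
    results: list[ScanResult],
    official_ssid: str,
    known_bssids: set[str],
    threshold: float = 0.8,
) -> list[ScanResult]:
    """Flag networks that imitate the official SSID (hypothetical heuristic)."""
    known = {b.lower() for b in known_bssids}
    flagged = []
    for r in results:
        if r.ssid == official_ssid:
            # Exact name, but broadcast from an access point the hotel never listed.
            if r.bssid.lower() not in known:
                flagged.append(r)
        else:
            # Near-identical name, e.g. a digit swapped in for a letter.
            similarity = SequenceMatcher(None, r.ssid.lower(), official_ssid.lower()).ratio()
            if similarity >= threshold:
                flagged.append(r)
    return flagged


scan = [
    ScanResult("GrandHotel_Guest", "aa:bb:cc:00:00:01"),
    ScanResult("GrandHotel_Guest", "de:ad:be:ef:00:01"),
    ScanResult("GrandHote1_Guest", "aa:bb:cc:00:00:02"),
    ScanResult("CoffeeShop", "11:22:33:44:55:66"),
]
for net in suspicious_networks(scan, "GrandHotel_Guest", {"AA:BB:CC:00:00:01"}):
    print(net.ssid, net.bssid)
```

In practice guests rarely have the hotel’s list of legitimate BSSIDs, which is one reason the FBI’s advice leans on habits such as using a VPN rather than on detection.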

Once the malicious actor gains access to the business network, they can steal proprietary data and upload malware, including ransomware. Cybercriminals or nation-state actors can use stolen intellectual property to facilitate their own schemes or produce counterfeit versions of proprietary products. Cybercriminals can use information gathered from access to company data to trick business executives into transferring company funds to the criminal.

ink on black paper { Aura Satz, Tone Transmission, 2020 }

‘And the state of (gestures at everything) *this* is not helping lol.’ –britney gil

Some luxury brands have started adding surveillance to their arsenal, turning to blockchains to undermine the emergence of secondary markets in a way that pays lip service to sustainability and labor ethics concerns. LVMH launched Aura in 2019, a blockchain-enabled platform for authenticating products from the Louis Vuitton, Christian Dior, Marc Jacobs, and Fenty brands, among others. Meanwhile, fashion label Stella McCartney began a transparency and data-monitoring partnership with Google for tracking garment provenance, discouraging fakes and promising to ensure the ethical integrity of supply chains. Elsewhere, a host of fashion blockchain startups, including Loomia, Vechain, and Faizod, have emerged, offering tracking technologies to assuage customer concerns over poor labor conditions and manufacturing-related pollution by providing transparency on precisely where products are made and by which subcontractors. […]

Companies such as Arianee, Dentsu and Evrythng also aim to track clothes on consumers’ bodies and in their closets. At the forefront of this trend is Eon, which, with backing from Microsoft and buy-in from mainstream fashion brands such as H&M and Target, has begun embedding small, unobtrusive RFID tags (currently used for everything from tracking inventory to runners on a marathon course) in garments, designed to transmit data without human intervention. […]

According to the future depicted by Eon and its partners, garments would become datafied brand assets administering access to surveillance-enabled services, benefits, and experiences. The people who put on these clothes would become “users” rather than wearers. In some respects, this would simply extend some of the functionality of niche wearables to garments in general. Think: swimsuits able to detect UV light and prevent overexposure to the sun, yoga pants that prompt the wearer to hold the right pose, socks that monitor for disease risks, and fitness trackers embedded into sports shirts. […]

According to one potential scenario outlined by Eon partners, a running shoe could send a stream of usage data to the manufacturer so that it could notify the consumer when the shoe “nears the end of its life.” In another, sensors would determine when a garment needs repairing and trigger an online auction among competing menders. Finally, according to another, sensors syncing with smart mirrors would offer style advice and personalized advertising.

This is Rooshious balls. This is a ttrinch. This is mistletropes. This is Canon Futter with the popynose.

For more than half a century, governments all over the world trusted a single company to keep the communications of their spies, soldiers and diplomats secret.

The company, Crypto AG, got its first break with a contract to build code-making machines for U.S. troops during World War II. Flush with cash, it became a dominant maker of encryption devices for decades, navigating waves of technology from mechanical gears to electronic circuits and, finally, silicon chips and software.

The Swiss firm made millions of dollars selling equipment to more than 120 countries well into the 21st century. Its clients included Iran, military juntas in Latin America, nuclear rivals India and Pakistan, and even the Vatican.

But what none of its customers ever knew was that Crypto AG was secretly owned by the CIA in a highly classified partnership with West German intelligence. These spy agencies rigged the company’s devices so they could easily break the codes that countries used to send encrypted messages. […]

“It was the intelligence coup of the century,” the CIA report concludes. […]

The program had limits. America’s main adversaries, including the Soviet Union and China, were never Crypto customers.

related { How Big Companies Spy on Your Emails }

Everywhere erriff you went and every bung you arver dropped into, in cit or suburb or in addled areas, the Rose and Bottle or Phoenix Tavern or Power’s Inn or Jude’s Hotel or wherever you scoured the countryside from Nannywater to Vartryville or from Porta Lateen to the lootin quarter

On Monday, the Justice Department announced that it was charging four members of China’s People’s Liberation Army with the 2017 Equifax breach that resulted in the theft of personal data of about 145 million Americans.

Using the personal data of millions of Americans against their will is certainly alarming. But what’s the difference between the Chinese government stealing all that information and a data broker amassing it legally without user consent and selling it on the open market? Both are predatory practices to invade privacy for insights and strategic leverage. […]

Equifax is eager to play the hapless victim in all this. […] “The attack on Equifax was an attack on U.S. consumers as well as the United States,” [Equifax’s chief executive] said. […]

According to a 2019 class-action lawsuit, the company’s cybersecurity practices were a nightmare. The suit alleged that “sensitive personal information relating to hundreds of millions of Americans was not encrypted, but instead was stored in plain text” and “was accessible through a public-facing, widely used website.” Another example of the company’s weak safeguards, according to the suit, shows the company struggling to use a competent password system. “Equifax employed the username ‘admin’ and the password ‘admin’ to protect a portal used to manage credit disputes,” it read.

Though the attack was quite sophisticated (the hackers sneaked out information in small, hard-to-detect chunks and routed internet traffic through 34 servers in over a dozen countries to cover their tracks), Equifax’s apparent carelessness made it a perfect target.

The takeaway: While almost anything digital is at some risk of being hacked, the Equifax attack was largely preventable.
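The ‘admin’/‘admin’ portal alleged in the lawsuit is the kind of mistake even a trivial automated check would catch. A minimal sketch, assuming a hypothetical in-house policy: the list of common defaults and the 12-character minimum are illustrative choices, not Equifax’s or any standard’s actual rules.

```python
# Illustrative, deliberately short list of passwords that should never be accepted.
COMMON_DEFAULTS = frozenset({"admin", "password", "123456", "root", "guest", "changeme"})


def credential_problems(username: str, password: str, min_length: int = 12) -> list[str]:
    """Return the reasons a credential pair should be rejected (empty list = none found)."""
    problems = []
    if password.lower() == username.lower():
        problems.append("password matches username")
    if password.lower() in COMMON_DEFAULTS:
        problems.append("password is a common default")
    if len(password) < min_length:
        problems.append(f"password shorter than {min_length} characters")
    return problems


# The alleged Equifax dispute-portal login trips every rule.
print(credential_problems("admin", "admin"))
```

A real deployment would check candidates against a much larger breached-password list, but even this toy version rejects the pair the suit describes.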

related { The End of Privacy as We Know It? }

related { The FBI downloaded CIA’s hacking tools using Starbucks Wi-Fi }

The Mookse had a sound eyes right but he could not all hear

Now we learn that San Diego City Attorney Mara Elliott gave the approval to General Electric to outfit 4,000 new “smart street lights” with cameras and microphones in 2017. […]

The City paid $30 million for the contract. But the larger issue is that General Electric has already made more than $1 billion selling San Diego residents’ data to Wall Street.

The City of San Diego gave what appears to be unrestricted rights to the private data, according to the contract. […]

San Diego is now home to the largest mass surveillance operation in the country.

General Electric and its subsidiaries* have access to all the processed data in perpetuity with no oversight.

photo { Brad Rimmer }

‘The way in which the other presents himself, exceeding the idea of the other in me, we here name face.’ –Emmanuel Levinas

His tiny company, Clearview AI, devised a groundbreaking facial recognition app. You take a picture of a person, upload it and get to see public photos of that person, along with links to where those photos appeared. The system — whose backbone is a database of more than three billion images that Clearview claims to have scraped from Facebook, YouTube, Venmo and millions of other websites — goes far beyond anything ever constructed by the United States government or Silicon Valley giants. […]
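At its core, a search like this is a nearest-neighbor lookup: each scraped photo is reduced to a numeric vector (a face embedding), and the uploaded picture’s vector is compared against all of them. Clearview has not published its method, so the sketch below is only an assumed, simplified version: the embeddings are tiny made-up vectors standing in for the output of some face-recognition model, similarity is plain cosine similarity, the URLs are placeholders, and the 0.9 cutoff is arbitrary.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_matches(
    query: list[float], index: dict[str, list[float]], k: int = 3, min_score: float = 0.9
) -> list[str]:
    """Return up to k source URLs whose stored embedding best matches the query."""
    scored = [(cosine(query, emb), url) for url, emb in index.items()]
    # Keep only close matches, best first.
    hits = sorted((s for s in scored if s[0] >= min_score), reverse=True)
    return [url for _, url in hits[:k]]


# Hypothetical index: source URL -> embedding of the face found there.
index = {
    "https://example.com/profile/1": [1.0, 0.0, 0.0],
    "https://example.com/party/2": [0.9, 0.1, 0.0],
    "https://example.com/other/3": [0.0, 1.0, 0.0],
}
print(top_matches([1.0, 0.0, 0.0], index))
```

The returned links are what makes such a tool powerful: each match points back to the page where the photo appeared. A system over billions of images would use an approximate nearest-neighbor index rather than this linear scan.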

Tech companies capable of releasing such a tool have refrained from doing so; in 2011, Google’s chairman at the time said it was the one technology the company had held back because it could be used “in a very bad way.” Some large cities, including San Francisco, have barred police from using facial recognition technology.

But without public scrutiny, more than 600 law enforcement agencies have started using Clearview in the past year, according to the company, which declined to provide a list. The computer code underlying its app, analyzed by The New York Times, includes programming language to pair it with augmented-reality glasses; users would potentially be able to identify every person they saw. The tool could identify activists at a protest or an attractive stranger on the subway, revealing not just their names but where they lived, what they did and whom they knew.

And it’s not just law enforcement: Clearview has also licensed the app to at least a handful of companies for security purposes. […]

In addition to Mr. Ton-That, Clearview was founded by Richard Schwartz — who was an aide to Rudolph W. Giuliani when he was mayor of New York — and backed financially by Peter Thiel, a venture capitalist behind Facebook and Palantir.

Is there ever a day that mattresses are not on sale?

The prospect of data-driven ads, linked to the expressed preferences of identifiable people, proved irresistible over the past decade. From 2010 through 2019, Facebook’s revenue grew from just under $2 billion to $66.5 billion per year, almost all of it from advertising. Google’s revenue rose from just under $25 billion in 2010 to just over $155 billion in 2019. Neither company’s growth seems in danger of abating.

The damage to a healthy public sphere has been devastating. All that ad money now going to Facebook and Google once found its way to, say, Condé Nast, News Corporation, the Sydney Morning Herald, NBC, the Washington Post, El País, or the Buffalo Evening News. In 2019, for the first time, more U.S. ad revenue went to targeted digital ads than to radio, television, cable, magazine, and newspaper ads combined. It won’t be the last time. Not coincidentally, journalists are losing their jobs at a rate not seen since the Great Recession.

Meanwhile, there is growing concern that this sort of precise ad targeting might not work as well as advertisers have assumed. Right now my Facebook page has ads for some products I would not possibly ever desire.

{ Slate | Continue reading | Thanks Tim }

typography can save the world just kidding

Google is engaged with one of the country’s largest health-care systems to collect and crunch the detailed personal health information of millions of Americans across 21 states.

The initiative, code-named “Project Nightingale,” appears to be the biggest in a series of efforts by Silicon Valley giants to gain access to personal health data and establish a toehold in the massive health-care industry. […] Google began the effort in secret last year with St. Louis-based Ascension, the second-largest health system in the U.S., with the data sharing accelerating since summer, the documents show.

The data involved in Project Nightingale encompasses lab results, doctor diagnoses and hospitalization records, among other categories, and amounts to a complete health history, including patient names and dates of birth.

Neither patients nor doctors have been notified. At least 150 Google employees already have access to much of the data on tens of millions of patients, according to a person familiar with the matter and documents.

Some Ascension employees have raised questions about the way the data is being collected and shared, both from a technological and ethical perspective, according to the people familiar with the project. But privacy experts said it appeared to be permissible under federal law. That law, the Health Insurance Portability and Accountability Act of 1996, generally allows hospitals to share data with business partners without telling patients, as long as the information is used “only to help the covered entity carry out its health care functions.”

Google in this case is using the data, in part, to design new software, underpinned by advanced artificial intelligence and machine learning, that zeroes in on individual patients to suggest changes to their care.

oil on panel { Mark Ryden, Incarnation, 2009 | Work in progress of the intricate frame for Mark Ryden’s painting Incarnation }

Dave, although you took very thorough precautions in the pod against my hearing you, I could see your lips move

Founded in 2004 by Peter Thiel and some fellow PayPal alumni, Palantir cut its teeth working for the Pentagon and the CIA in Afghanistan and Iraq. The company’s engineers and products don’t do any spying themselves; they’re more like a spy’s brain, collecting and analyzing information that’s fed in from the hands, eyes, nose, and ears. The software combs through disparate data sources—financial documents, airline reservations, cellphone records, social media postings—and searches for connections that human analysts might miss. It then presents the linkages in colorful, easy-to-interpret graphics that look like spider webs. U.S. spies and special forces loved it immediately; they deployed Palantir to synthesize and sort the blizzard of battlefield intelligence. It helped planners avoid roadside bombs, track insurgents for assassination, even hunt down Osama bin Laden. The military success led to federal contracts on the civilian side. The U.S. Department of Health and Human Services uses Palantir to detect Medicare fraud. The FBI uses it in criminal probes. The Department of Homeland Security deploys it to screen air travelers and keep tabs on immigrants.

Police and sheriff’s departments in New York, New Orleans, Chicago, and Los Angeles have also used it, frequently ensnaring in the digital dragnet people who aren’t suspected of committing any crime. People and objects pop up on the Palantir screen inside boxes connected to other boxes by radiating lines labeled with the relationship: “Colleague of,” “Lives with,” “Operator of [cell number],” “Owner of [vehicle],” “Sibling of,” even “Lover of.” If the authorities have a picture, the rest is easy. Tapping databases of driver’s license and ID photos, law enforcement agencies can now identify more than half the population of U.S. adults. […]
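The boxes-and-lines display is a graph of entities joined by labeled relationships, and the dragnet effect follows from how such graphs are traversed: starting from one person and following links a few hops out pulls in relatives, housemates and colleagues who are suspected of nothing. The sketch below is a hypothetical illustration of that idea, not Palantir’s software; the entities are made up and, for simplicity, each label is stored on both directions of a link even when, like “Owner of”, it is really one-way.

```python
from collections import defaultdict, deque


class LinkGraph:
    """Entities connected by labeled relationships (hypothetical toy model)."""

    def __init__(self) -> None:
        # entity -> list of (relationship label, linked entity)
        self.edges = defaultdict(list)

    def link(self, a: str, label: str, b: str) -> None:
        # Simplification: store the same label in both directions.
        self.edges[a].append((label, b))
        self.edges[b].append((label, a))

    def within_hops(self, start: str, max_hops: int) -> dict[str, int]:
        """Breadth-first search: every entity reachable in at most max_hops links."""
        hops = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if hops[node] == max_hops:
                continue  # don't expand past the hop limit
            for _label, other in self.edges[node]:
                if other not in hops:
                    hops[other] = hops[node] + 1
                    queue.append(other)
        del hops[start]  # report only the people swept in, not the starting point
        return hops


g = LinkGraph()
g.link("Suspect", "Sibling of", "Ana")
g.link("Ana", "Colleague of", "Ben")
g.link("Ben", "Lives with", "Cara")
g.link("Suspect", "Owner of [vehicle]", "Car-123")
print(g.within_hops("Suspect", 2))  # {'Ana': 1, 'Car-123': 1, 'Ben': 2}
```

Ben, the sibling’s colleague, is already two links away; raising `max_hops` to 3 sweeps in Cara, his housemate, as well.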

In March a former computer engineer for Cambridge Analytica, the political consulting firm that worked for Donald Trump’s 2016 presidential campaign, testified in the British Parliament that a Palantir employee had helped Cambridge Analytica use the personal data of up to 87 million Facebook users to develop psychographic profiles of individual voters. […] The employee, Palantir said, worked with Cambridge Analytica on his own time. […]

Legend has it that Stephen Cohen, one of Thiel’s co-founders, programmed the initial prototype for Palantir’s software in two weeks. It took years, however, to coax customers away from the longtime leader in the intelligence analytics market, a software company called I2 Inc.

In one adventure missing from the glowing accounts of Palantir’s early rise, I2 accused Palantir of misappropriating its intellectual property through a Florida shell company registered to the family of a Palantir executive. A company claiming to be a private eye firm had been licensing I2 software and development tools and spiriting them to Palantir for more than four years. I2 said the cutout was registered to the family of Shyam Sankar, Palantir’s director of business development.

I2 sued Palantir in federal court, alleging fraud, conspiracy, and copyright infringement. […] Palantir agreed to pay I2 about $10 million to settle the suit. […]

Sankar, Palantir employee No. 13 and now one of the company’s top executives, also showed up in another Palantir scandal: the company’s 2010 proposal for the U.S. Chamber of Commerce to run a secret sabotage campaign against the group’s liberal opponents. Hacked emails released by the group Anonymous indicated that Palantir and two other defense contractors pitched outside lawyers for the organization on a plan to snoop on the families of progressive activists, create fake identities to infiltrate left-leaning groups, scrape social media with bots, and plant false information with liberal groups to subsequently discredit them.

After the emails emerged in the press, Palantir offered an explanation similar to the one it provided in March for its U.K.-based employee’s assistance to Cambridge Analytica: It was the work of a single rogue employee.