During the winter of 2007, a UCLA professor of psychiatry named Gary Small recruited six volunteers—three experienced Web surfers and three novices—for a study on brain activity. He gave each a pair of goggles onto which Web pages could be projected. Then he slid his subjects, one by one, into the cylinder of a whole-brain magnetic resonance imager and told them to start searching the Internet. As they used a handheld keypad to Google various preselected topics—the nutritional benefits of chocolate, vacationing in the Galapagos Islands, buying a new car—the MRI scanned their brains for areas of high activation, indicated by increases in blood flow.

The two groups showed marked differences. Brain activity of the experienced surfers was far more extensive than that of the newbies, particularly in areas of the prefrontal cortex associated with problem-solving and decision-making. Small then had his subjects read normal blocks of text projected onto their goggles; in this case, scans revealed no significant difference in areas of brain activation between the two groups. The evidence suggested, then, that the distinctive neural pathways of experienced Web users had developed because of their Internet use.

The most remarkable result of the experiment emerged when Small repeated the tests six days later. In the interim, the novices had agreed to spend an hour a day online, searching the Internet. The new scans revealed that their brain activity had changed dramatically; it now resembled that of the veteran surfers. “Five hours on the Internet and the naive subjects had already rewired their brains,” Small wrote. He later repeated all the tests with 18 more volunteers and got the same results.

When first publicized, the findings were greeted with cheers. By keeping lots of brain cells buzzing, Google seemed to be making people smarter. But as Small was careful to point out, more brain activity is not necessarily better brain activity. The real revelation was how quickly and extensively Internet use reroutes people’s neural pathways. “The current explosion of digital technology not only is changing the way we live and communicate,” Small concluded, “but is rapidly and profoundly altering our brains.”

What kind of brain is the Web giving us? That question will no doubt be the subject of a great deal of research in the years ahead. Already, though, there is much we know or can surmise—and the news is quite disturbing. Dozens of studies by psychologists, neurobiologists, and educators point to the same conclusion: When we go online, we enter an environment that promotes cursory reading, hurried and distracted thinking, and superficial learning. Even as the Internet grants us easy access to vast amounts of information, it is turning us into shallower thinkers, literally changing the structure of our brain. (…)

What we’re experiencing is, in a metaphorical sense, a reversal of the early trajectory of civilization: We are evolving from cultivators of personal knowledge into hunters and gatherers in the electronic data forest. In the process, we seem fated to sacrifice much of what makes our minds so interesting.

{ Nicholas Carr/Wired | Continue reading }

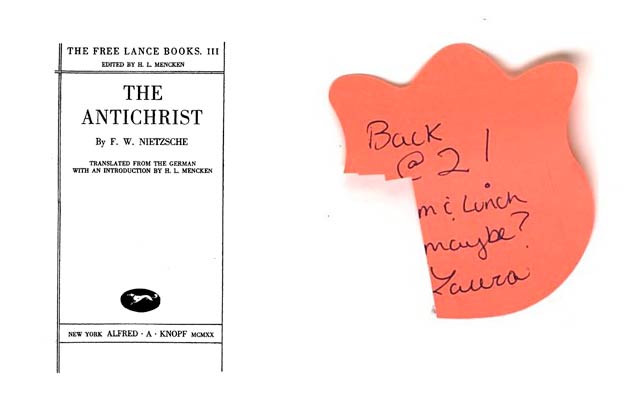

In an ideal world, I would sit down at my computer, do my work, and that would be that. In this world, I get entangled in surfing and an hour disappears. (…)

For years I would read during breakfast, the coffee stirring my pleasure in the prose. You can’t surf during breakfast. Well, maybe you can. Now I don’t have coffee and I don’t eat breakfast. I get up and check my e-mail, blog comments and Twitter.

{ Roger Ebert/Chicago Sun-Times }