Tech bubbles come in two varieties: the ones that leave something behind, and the ones that leave nothing behind. […]

cryptocurrency/NFTs, or the complex financial derivatives that led up to the 2008 financial crisis. These crises left behind very little reusable residue. […]

WorldCom was a gigantic fraud and it kicked off a fiber-optic bubble, but when WorldCom cratered, it left behind a lot of fiber that’s either in use today or waiting to be lit up. On balance, the world would have been better off without the WorldCom fraud, but at least something could be salvaged from the wreckage.

That’s unlike, say, the Enron scam or the Uber scam, both of which left the world worse off than they found it in every way. Uber burned $31 billion in investor cash, mostly from the Saudi royal family, to create the illusion of a viable business. Not only did that fraud end up screwing over the retail investors who made the Saudis and the other early investors a pile of money after the company’s IPO – but it also destroyed the legitimate taxi business and convinced cities all over the world to starve their transit systems of investment because Uber seemed so much cheaper. Uber continues to hemorrhage money, resorting to cheap accounting tricks to make it seem like they’re finally turning it around, even as they double the price of rides and halve driver pay (and still lose money on every ride). The market can remain irrational longer than any of us can stay solvent, but when Uber runs out of suckers, it will go the way of other pump-and-dumps like WeWork.

What kind of bubble is AI? […]

Accountants might value an AI tool’s ability to draft a tax return. Radiologists might value the AI’s guess about whether an X-ray suggests a cancerous mass. But with AIs’ tendency to “hallucinate” and confabulate, there’s an increasing recognition that these AI judgments require a “human in the loop” to carefully review them. In other words, an AI-supported radiologist should spend exactly the same amount of time considering your X-ray, and then see if the AI agrees with their judgment, and, if not, they should take a closer look. AI should make radiology more expensive, in order to make it more accurate. […]

Cruise, the “self-driving car” startup that was just forced to pull its cars off the streets of San Francisco, pays 1.5 staffers to supervise every car on the road. In other words, their AI replaces a single low-waged driver with 1.5 more expensive remote supervisors – and their cars still kill people. […]

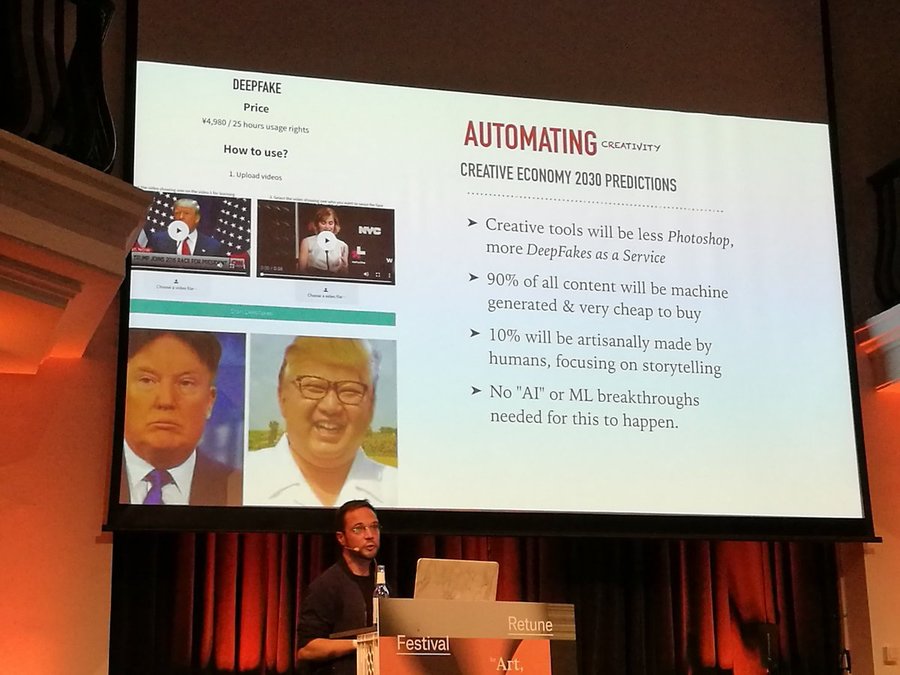

Just take one step back and look at the hype through this lens. All the big, exciting uses for AI are either low-dollar (helping kids cheat on their homework, generating stock art for bottom-feeding publications) or high-stakes and fault-intolerant (self-driving cars, radiology, hiring, etc.).

{ Locus/Cory Doctorow | Continue reading }