brain

The prevalence of depression among those with migraine is approximately twice as high as for those without the disease (men: 8.4% vs. 3.4%; women: 12.4% vs. 5.7%), according to a new study published by University of Toronto researchers. […]

Consistent with prior research, the prevalence of migraines was much higher in women than men, with one in every seven women, compared to one in every 16 men, reporting that they had migraines.

{ University of Toronto | Continue reading }
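A quick check of the arithmetic, as a minimal Python sketch. The ratios are computed directly from the percentages and the one-in-seven / one-in-16 figures quoted above; the helper name `prevalence_ratio` is just an illustrative choice. Worked out, depression is roughly 2.5 times as prevalent among men with migraine and about 2.2 times among women, while migraine itself is about 2.3 times as common in women as in men.

```python
def prevalence_ratio(group_rate: float, reference_rate: float) -> float:
    """Prevalence in one group divided by prevalence in a reference group."""
    return group_rate / reference_rate


# Depression prevalence (percent): (with migraine, without migraine).
DEPRESSION = {"men": (8.4, 3.4), "women": (12.4, 5.7)}

# Ratio of depression prevalence, migraine vs. no migraine, per sex.
RATIOS = {sex: prevalence_ratio(*rates) for sex, rates in DEPRESSION.items()}

# Migraine prevalence: 1 in 7 women vs. 1 in 16 men.
MIGRAINE_WOMEN_VS_MEN = prevalence_ratio(1 / 7, 1 / 16)

for sex, ratio in RATIOS.items():
    print(f"{sex}: depression {ratio:.2f}x as prevalent with migraine")
print(f"migraine: {MIGRAINE_WOMEN_VS_MEN:.2f}x as common in women")
```

So "approximately twice" is, if anything, a slight understatement for both sexes.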

Being ostracized or spurned is just like slamming your hand in a door. To the brain, pain is pain, whether it’s social or physical.

{ Bloomberg Businessweek | Continue reading }

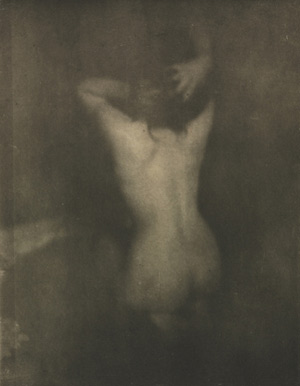

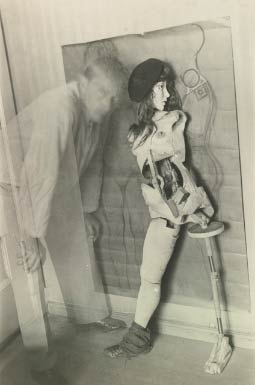

photo { Edward Steichen, Dolor, 1903 }

brain, neurosciences | October 18th, 2013 12:22 pm

“Brain training makes you more intelligent.” – WRONG

There are two abilities that must be distinguished: working memory capacity and general fluid intelligence. The first refers to the ability to keep information in mind or readily retrievable, particularly when we are performing several tasks simultaneously. The second is the ability to reason through complex problems and solve them. Most brain-training programs target only the first: working memory capacity. So yes, you can and should use these games or apps to improve your working memory. But that won’t make you any better at solving math problems.

{ United Academics | Continue reading }

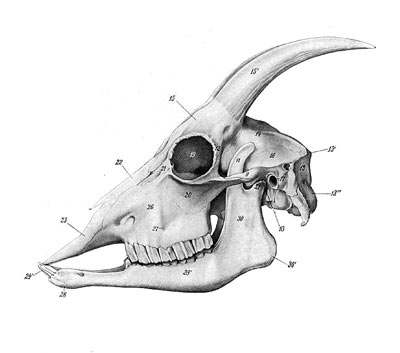

images { Matt Bower }

brain, guide | October 15th, 2013 11:24 am

It has been conjectured that individuals may be left-brain dominant or right-brain dominant based on personality and cognitive style, but neuroimaging data have not provided clear evidence of whether such phenotypic differences in the strength of left-dominant or right-dominant networks exist.

{ Thoughts on Thoughts | Continue reading }

images { Bernhard Handick | 2 }

brain | August 24th, 2013 2:19 pm

Many scholars have argued that Nietzsche’s dementia was caused by syphilis. A careful review of the evidence suggests that this consensus is probably incorrect. The syphilis hypothesis is not compatible with most of the evidence available. Other hypotheses – such as slowly growing right-sided retro-orbital meningioma – provide a more plausible fit to the evidence.

{ Journal of Medical Biography | PDF }

From his late 20s onward, Nietzsche experienced severe, generally right-sided headaches. He concurrently suffered a progressive loss of vision in his right eye and developed cranial nerve findings that were documented on neurological examinations in addition to a disconjugate gaze evident in photographs. His neurological findings are consistent with a right-sided frontotemporal mass. In 1889, Nietzsche also developed a new-onset mania which was followed by a dense abulia, also consistent with a large frontal tumor. […] An intracranial mass may have been the etiology of his headaches and neurological findings and the cause of his ultimate mental collapse in 1889.

{ Neurosurgery | PDF }

brain, health, nietzsche, science | August 18th, 2013 11:01 am

When we recognize someone, we integrate information from across their face into a perceptual whole, and do so using a specialized brain region. Recognizing other kinds of objects does not engage such specific brain areas, and is achieved in a much more parts-based way.

In a recent review of the literature [The evolution of holistic processing of faces], we investigated how this face-specific mode of perception may have evolved by examining the evidence for face-based holistic processing in other species. A surprisingly wide variety of other animals can recognize each other from their “face,” but for most of these there is either evidence that they don’t do this “holistically” (dogs are an example) or insufficient evidence to claim that they do (typically because the experiments are poorly designed).

There is good evidence that some species of monkey are as affected by turning the face upside down as humans are (which is one index of holistic processing), and one species of monkey (Rhesus macaques) also shows evidence of the “composite effect.” The composite effect refers to the fact that people find it difficult to recognize the top half of a face if it is shown lined up with the bottom half of a different face, because we can’t help integrating the two halves into a new whole. People have trouble recognizing other primate faces when they are upside down, but only show the composite effect for human faces.

{ University of Newcastle | Continue reading }

brain, faces, science | April 21st, 2013 3:44 pm

“GB” is a 28-year-old man with a curious condition: his optic nerves are in the wrong place.

Most people have an optic chiasm, a crossroads where half of the signals from each eye cross over the midline, in such a way that each half of the brain gets information from one side of space. GB, however, was born with achiasma – the absence of this crossover. It’s an extremely rare disorder in humans, although it’s more common in some breeds of animals, such as Belgian sheepdogs. […]

In the absence of a left-right crossover, all of the signals from GB’s left eye end up in his left visual cortex, and vice versa. But the question was, how does the brain make sense of it? Normally, remember, each half of the cortex corresponds to half our visual field. But in GB’s brain, each half has to cope with the whole visual field – twice as much space (even though it’s getting no more signals than normal).

{ Neuroskeptic | Continue reading }
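The wiring difference can be sketched as a toy lookup in Python. It is deliberately simplified: it models only which hemisphere receives each eye's signals about each half of space, and ignores everything else about the visual pathway. The names `Side`, `receiving_hemisphere` and `fields_reaching` are hypothetical, chosen for illustration.

```python
from enum import Enum


class Side(Enum):
    LEFT = "left"
    RIGHT = "right"


def opposite(side: Side) -> Side:
    return Side.RIGHT if side is Side.LEFT else Side.LEFT


def receiving_hemisphere(eye: Side, visual_field: Side, has_chiasm: bool) -> Side:
    """Which visual cortex gets one eye's signals about one half of space.

    With a chiasm, fibres from the nasal half of each retina cross the midline,
    so each hemisphere handles the opposite half of space, whichever eye the
    signal came from. Without a chiasm (achiasma), nothing crosses: every fibre
    stays on the side of the eye it came from.
    """
    if has_chiasm:
        return opposite(visual_field)
    return eye


def fields_reaching(hemisphere: Side, has_chiasm: bool) -> set:
    """Halves of visual space represented in one hemisphere (from either eye)."""
    return {
        field
        for eye in Side
        for field in Side
        if receiving_hemisphere(eye, field, has_chiasm) is hemisphere
    }


for chiasm in (True, False):
    label = "typical" if chiasm else "achiasma"
    seen = sorted(f.value for f in fields_reaching(Side.LEFT, chiasm))
    print(f"{label}: left hemisphere sees {seen}")
```

With a chiasm, the left hemisphere represents only the right half of space; in the achiasmic case it represents both halves, all of it from the left eye.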

brain, eyes | April 15th, 2013 6:00 am

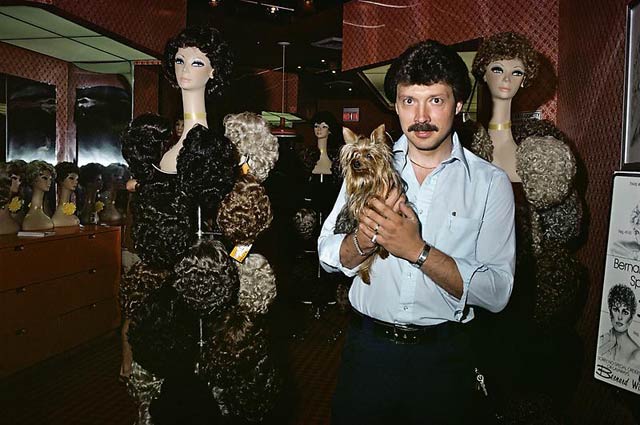

brain, photogs | April 5th, 2013 12:00 pm

Gerald Crabtree, a neurobiologist at Stanford University, states that intelligent human behavior requires between 2,000 and 5,000 genes to work together. A mutation or other fault in any of these genes, and some kind of intellectual deficiency results. Before the creation of complex societies, humans suffering from these mutations would have died. But modern societies may have allowed the more intelligent to care for the less intelligent. While anyone who has taken part in a group project understands this phenomenon, it doesn’t explain why scores on IQ and other tests have consistently risen, or why people with high scores on those tests still do stupid things.

{ United Academics | Continue reading }

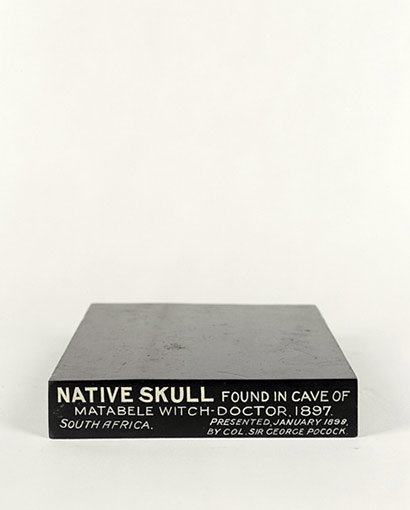

photo { via Bill Sullivan }

brain, psychology | April 4th, 2013 12:51 pm

Everything seems to indicate that the next decade, the 2020s, will be the magic decade of the brain, with amazing science but also amazing applications. With the development of nanoscale neural probes and high-speed, two-way brain-computer interfaces (BCI), by the end of the next decade we may have our iPhones implanted in our brains and become a telepathic species. […]

Last month the New York Times revealed that the Obama Administration may soon seek billions of dollars from Congress for a Brain Activity Map (BAM) project. […] The project may be partly based on the paper “The Brain Activity Map Project and the Challenge of Functional Connectomics” (Neuron, June 2012) by six well-known neuroscientists. […]

A new paper “The Brain Activity Map” (Science, March 2013), written as an executive summary by the same six neuroscientists and five more, is more explicit: “The Brain Activity Map (BAM), could put neuroscientists in a position to understand how the brain produces perception, action, memories, thoughts, and consciousness… Within 5 years, it should be possible to monitor and/or to control tens of thousands of neurons, and by year 10 that number will increase at least 10-fold. By year 15, observing 1 million neurons with markedly reduced invasiveness should be possible. With 1 million neurons, scientists will be able to evaluate the function of the entire brain of the zebrafish or several areas from the cerebral cortex of the mouse. In parallel, we envision developing nanoscale neural probes that can locally acquire, process, and store accumulated data. Networks of “intelligent” nanosystems would be capable of providing specific responses to externally applied signals, or to their own readings of brain activity.”

{ IEET | Continue reading }
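The milestones quoted above imply a steep, roughly exponential ramp. Here is a minimal Python sketch of that arithmetic. It assumes (our assumption, not the paper's) that "tens of thousands" means 10,000 at year 5 and that growth follows a constant yearly multiplier. Under those assumptions, going from 10,000 to 1 million neurons between year 5 and year 15 requires about a 1.58× increase per year, a doubling roughly every 18 months.

```python
import math


def annual_growth_factor(start: float, end: float, years: float) -> float:
    """Constant yearly multiplier that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years)


def doubling_time(growth_factor: float) -> float:
    """Years needed to double at a constant yearly multiplier."""
    return math.log(2) / math.log(growth_factor)


# Assumption: "tens of thousands" taken as 10,000 (the low end).
YEAR_5_NEURONS = 10_000
# "By year 10 that number will increase at least 10-fold."
YEAR_10_NEURONS_MIN = YEAR_5_NEURONS * 10
# "By year 15, observing 1 million neurons ... should be possible."
YEAR_15_NEURONS = 1_000_000

growth = annual_growth_factor(YEAR_5_NEURONS, YEAR_15_NEURONS, years=10)
print(f"year-10 minimum: {YEAR_10_NEURONS_MIN:,} neurons")
print(f"yearly multiplier, year 5 to 15: {growth:.3f}")
print(f"doubling time: {doubling_time(growth):.2f} years")
```

Starting from a higher year-5 figure (say 50,000) lowers the required rate considerably; the sketch only illustrates the shape of the projection, not the authors' own planning assumptions.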

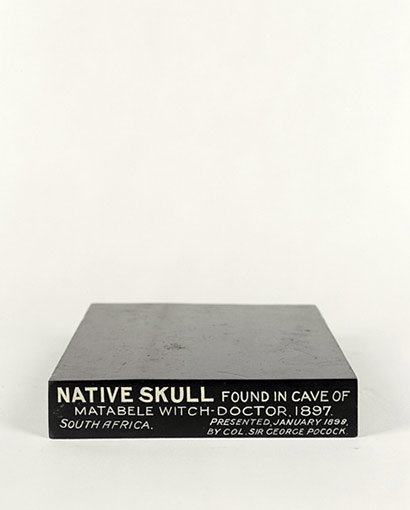

photo { Adam Broomberg & Oliver Chanarin }

brain, future, neurosciences, technology | March 18th, 2013 12:13 pm

The flip of a single molecular switch helps create the mature neuronal connections that allow the brain to bridge the gap between adolescent impressionability and adult stability. Now Yale School of Medicine researchers have reversed the process, recreating a youthful brain that facilitated both learning and healing in the adult mouse.

{ EurekAlert | Continue reading }

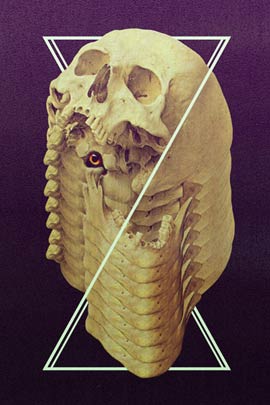

images { 1 | 2 }

brain, science | March 6th, 2013 11:57 am

Hunger, thirst, stress and drugs can create a change in the brain that transforms a repulsive feeling into a strong positive “wanting,” a new University of Michigan study indicates.

The research used salt appetite to show how powerful natural mechanisms of brain desires can instantly transform a cue that always predicted a repulsive Dead Sea Salt solution into an eagerly wanted beacon or motivational magnet. […]

This instant transformation of motivation, he said, lies in the ability of events to activate particular brain circuitry.

{ EurekAlert | Continue reading }

brain, gross | February 6th, 2013 3:47 pm

The question is, what happens to your ideas about computational architecture when you think of individual neurons not as dutiful slaves or as simple machines but as agents that have to be kept in line and that have to be properly rewarded and that can form coalitions and cabals and organizations and alliances? This vision of the brain as a sort of social arena of politically warring forces seems like sort of an amusing fantasy at first, but is now becoming something that I take more and more seriously, and it’s fed by a lot of different currents.

Evolutionary biologist David Haig has some lovely papers on intrapersonal conflicts where he’s talking about how even at the level of the genetics, even at the level of the conflict between the genes you get from your mother and the genes you get from your father, the so-called madumnal and padumnal genes, those are in opponent relations and if they get out of whack, serious imbalances can happen that show up as particular psychological anomalies.

We’re beginning to come to grips with the idea that your brain is not this well-organized hierarchical control system where everything is in order, a very dramatic vision of bureaucracy. In fact, it’s much more like anarchy with some elements of democracy. Sometimes you can achieve stability and mutual aid and a sort of calm united front, and then everything is hunky-dory, but then it’s always possible for things to get out of whack and for one alliance or another to gain control, and then you get obsessions and delusions and so forth.

You begin to think about the normal well-tempered mind, in effect, the well-organized mind, as an achievement, not as the base state, something that is only achieved when all is going well, but still, in the general realm of humanity, most of us are pretty well put together most of the time. This gives a very different vision of what the architecture is like, and I’m just trying to get my head around how to think about that.

{ Daniel C. Dennett/Edge | Continue reading }

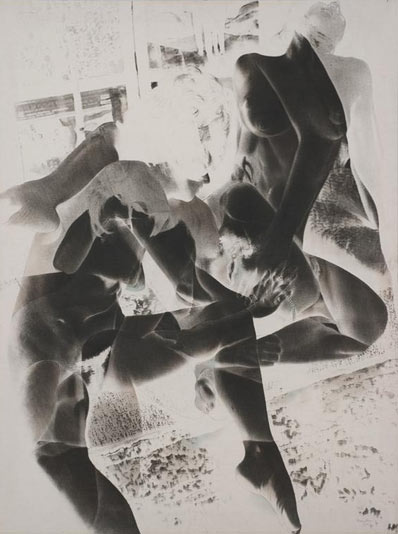

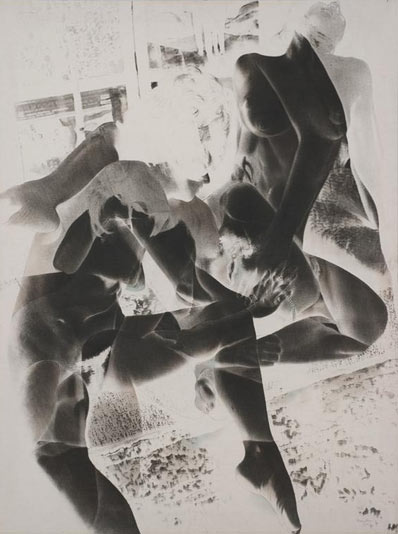

photo { Robert Heinecken }

brain, neurosciences, photogs | January 18th, 2013 9:36 am

The simplicity of modern life is making us more stupid, according to a scientific theory which claims humanity may have reached its intellectual and emotional peak as early as 4,000 BC.

Intelligence and the capacity for abstract thought evolved in our prehistoric ancestors living in Africa between 50,000 and 500,000 years ago, who relied on their wits to build shelters and hunt prey.

But in more civilised times where we no longer need to fight to survive, the selection process which favoured the smartest of our ancestors and weeded out the dullards is no longer in force.

Harmful mutations in our genes which reduce our “higher thinking” ability are therefore passed on through generations and allowed to accumulate, leading to a gradual dwindling of our intelligence as a species, a new study claims. […]

“I would wager that if an average citizen from Athens of 1000 BC were to appear suddenly among us, he or she would be among the brightest and most intellectually alive of our colleagues and companions, with a good memory, a broad range of ideas, and a clear-sighted view of important issues,” he said.

{ Guardian | Continue reading }

update 11/16 { Even if we do grant that cognitive evolutionary pressures have eased since 1000 BC, it’s not clear that this would make us ‘less intelligent.’ }

brain, science | November 14th, 2012 10:39 am

Brains are very costly. Right now, just sitting here, my brain (even though I’m not doing much other than talking) is consuming about 20-25 percent of my resting metabolic rate. That’s an enormous amount of energy, and to pay for that, I need to eat quite a lot of calories a day, maybe about 600 calories a day, which back in the Paleolithic was quite a difficult amount of energy to acquire. So having a brain of 1,400 cubic centimeters, about the size of my brain, is a fairly recent event and very costly.

The idea then is at what point did our brains become so important that we got the idea that brain size and intelligence really mattered more than our bodies? I contend that the answer was never, and certainly not until the Industrial Revolution.

Why did brains get so big? There are a number of obvious reasons. One of them, of course, is for culture and for cooperation and language and various other means by which we can interact with each other, and certainly those are enormous advantages. If you think about other early humans like Neanderthals, their brains are as large as or even larger than the typical brain size of human beings today. Surely those brains are so costly that there would have had to be a strong benefit to outweigh the costs. So cognition and intelligence and language and all of those important tasks that we do must have been very important.

We mustn’t forget that those individuals were also hunter-gatherers. They worked extremely hard every day to get a living. A typical hunter-gatherer has to walk between 9 and 15 kilometers a day. A typical female might walk 9 kilometers a day, a typical male hunter-gatherer might walk 15 kilometers a day, and that’s every single day. That’s day-in, day-out, there’s no weekend, there’s no retirement, and you do that for your whole life. It’s about the distance from Washington, DC to LA, walked every year. That’s how much walking hunter-gatherers did every single year.

{ Daniel Lieberman/Edge | Continue reading }
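The walking comparison is easy to check. A minimal Python sketch using the daily figures quoted above and a 365-day year: 9 km a day comes to about 3,300 km a year and 15 km a day to about 5,500 km, which brackets the straight-line distance between Washington, DC and Los Angeles. That distance, roughly 3,700 km, is an approximate figure added here for comparison; it is not from the talk, and the road distance is longer.

```python
DAYS_PER_YEAR = 365

# Daily walking distances quoted in the talk (km).
DAILY_KM = {"typical female": 9, "typical male": 15}

# Approximate straight-line Washington, DC to Los Angeles distance (km);
# an added reference figure, not from the source.
APPROX_DC_TO_LA_KM = 3_700


def annual_km(daily_km: float, days: int = DAYS_PER_YEAR) -> float:
    """Distance walked in a year at a fixed daily distance, with no days off."""
    return daily_km * days


for who, km in DAILY_KM.items():
    yearly = annual_km(km)
    print(f"{who}: {yearly:,} km/year, "
          f"{yearly / APPROX_DC_TO_LA_KM:.1f}x the DC-LA distance")
```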

brain, flashback, science | November 6th, 2012 2:07 pm

Neuroscientists from New York University and the University of California, Irvine have isolated the “when” and “where” of molecular activity that occurs in the formation of short-, intermediate-, and long-term memories. Their findings, which appear in the Proceedings of the National Academy of Sciences, offer new insights into the molecular architecture of memory formation and, with it, a better roadmap for developing therapeutic interventions for related afflictions.

{ NYU | Continue reading }

brain, memory | October 16th, 2012 6:33 am

When humans evolved bigger brains, we became the smartest animal alive and were able to colonise the entire planet. But for our minds to expand, a new theory goes, our cells had to become less willing to commit suicide – and that may have made us more prone to cancer.

When cells become damaged or just aren’t needed, they self-destruct in a process called apoptosis. In developing organisms, apoptosis is just as important as cell growth for generating organs and appendages – it helps “prune” structures to their final form.

By getting rid of malfunctioning cells, apoptosis also prevents cells from growing into tumours. […]

McDonald suggests that humans’ reduced capacity for apoptosis could help explain why our brains are so much bigger, relative to body size, than those of chimpanzees and other animals. When a baby animal starts developing, it quickly grows a great many neurons, and then trims some of them back. Beyond a certain point, no new brain cells are created.

Human fetuses may prune less than other animals, allowing their brains to swell.

{ NewScientist | Continue reading }

brain, health, science, theory | October 15th, 2012 11:53 am

Human beings form their beliefs about reality based on the constant torrent of information our brains receive through our senses. But some information is accepted and incorporated into our beliefs more easily than other information. More specifically, we’re all very eager to update our beliefs in response to good news, but much less so in response to bad news. This widespread reluctance to integrate negative information into our beliefs is known as the “good news/bad news effect.” […]

A new study by Tali Sharot et al. has shown that this bias towards good news can be decreased through transcranial magnetic stimulation, which involves exposing certain brain regions to a magnetic field.

{ United Academics | Continue reading }
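To make the asymmetry concrete, here is a toy Python model. It is our own illustration, not the method of Sharot et al.: a person revises a personal risk estimate toward new evidence, but with a larger learning rate when the news is good (the risk is lower than they thought) than when it is bad. The function name and the learning rates 0.6 and 0.3 are arbitrary, hypothetical choices.

```python
def update_risk_estimate(prior: float, evidence: float,
                         rate_good: float = 0.6, rate_bad: float = 0.3) -> float:
    """Move a risk estimate (in percent) part of the way toward the evidence.

    Evidence below the prior is good news (the risk is lower than feared) and
    uses `rate_good`; evidence above it is bad news and uses `rate_bad`.
    With rate_good > rate_bad, good news shifts the belief more than bad news.
    """
    error = evidence - prior
    rate = rate_good if error < 0 else rate_bad
    return prior + rate * error


# The same 10-point surprise in each direction.
after_good = update_risk_estimate(prior=40.0, evidence=30.0)  # good news
after_bad = update_risk_estimate(prior=20.0, evidence=30.0)   # bad news
print(f"good news: 40 -> {after_good:.1f}")
print(f"bad news:  20 -> {after_bad:.1f}")
```

In this toy framing, a weaker bias would correspond to the two learning rates moving closer together.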

photo { Joel Meyerowitz }

brain | October 12th, 2012 1:25 pm

brain, photogs, science | October 4th, 2012 12:02 pm

The idea that humans walk in circles is no urban myth. This was confirmed by Jan Souman and colleagues in a 2009 study, in which participants walked for hours at night in a German forest and the Tunisian Sahara. […]

Souman’s team rejected past theories, including the idea that people have one leg that’s stronger or longer than the other. If that were true you’d expect people to systematically veer off in the same direction, but their participants varied in their circling direction.

Now a team in France has made a bold attempt to get to the bottom of the mystery. […] [It] suggests that our propensity to walk in circles is related in some way to slight irregularities in the vestibular system. Located in the inner ear, the vestibular system guides our balance, and minor disturbances here could skew our sense of the direction of “straight ahead” just enough to make us go around in circles.

{ BPS | Continue reading }

photo { Hans Bellmer }

brain, neurosciences, photogs | October 4th, 2012 5:59 am

Researchers at the Fred Hutchinson Cancer Research Center have found traces of male DNA in women’s brains, which seems to come from the cells of a male fetus crossing the blood-brain barrier during pregnancy. This is known as microchimerism, and according to the researchers, this is the first description of male microchimerism in the female human brain.

{ Genome Engineering | Continue reading }

brain, genes | September 28th, 2012 12:01 pm