spy & security

The ads you see online are based on the sites, searches, or Facebook posts that get your interest. Some rebels therefore throw a wrench into the machinery — by demonstrating phony interests.

“Every once in a while, I Google something completely nutty just to mess with their algorithm,” wrote Shaun Breidbart. “You’d be surprised what sort of coupons CVS prints for me on the bottom of my receipt. They are clearly confused about both my age and my gender.”

[…]

“You never want to tell Facebook where you were born and your date of birth. That’s 98 percent of someone stealing your identity! And don’t use a straight-on photo of yourself — like a passport photo, driver’s license, graduation photo — that someone can use on a fake ID.”

[…]

“Create a different email address for every service you use”

[…]

“Oh yeah — and don’t use Facebook.”

{ NY Times | Continue reading }

google, guide, spy & security | October 14th, 2019 6:27 pm

Japanese idol Ena Matsuoka was attacked outside her home last month after a fan figured out her address from selfies she posted on social media, just by zooming in on the reflections in her pupils.

The fan, Hibiki Sato, 26, managed to identify a bus stop and the surrounding scenery from the reflection in Matsuoka’s eyes and matched them to a street using Google Maps.

{ Asia One | Continue reading }

Tokyo Shimbun, a metropolitan daily that reported on the stalking case, warned readers that even casual selfies may show surrounding buildings that could allow people to identify where the photos were taken.

It also said people shouldn’t make the V-sign with their hand, which Japanese often do in photos, because fingerprints could be stolen.

{ USA Today | Continue reading }

incidents, spy & security, technology | October 13th, 2019 8:11 am

iBorderCtrl is an AI-based lie detector project funded by the European Union’s Horizon 2020 programme. The tool will be used on people crossing the borders of some European countries. Its official purpose is to speed up border control. It will be tested in Hungary, Greece and Latvia until August 2019 and should then be officially deployed.

The project will analyze facial micro-expressions to detect lies. We have serious concerns about such a project. To those without any background in AI or computer science, the idea of using a computer to detect lies can sound very appealing, because computers are believed to be totally objective.

But the AI community knows this is far from true: biases are nearly omnipresent. We have no idea how the dataset used by iBorderCtrl was built.

More broadly, we should remember that AI has no understanding of humans (to be honest, it has no understanding at all). It is only beginning to recognize the words we speak, and it does not understand their meaning.

Lies rely on complex psychological mechanisms. Detecting them would require much more than a simple literal understanding. Trying to detect them from a handful of key facial expressions seems utopian, especially since facial expressions vary from one culture to another. For example, nodding usually means “yes” in the Western world, but it means “no” in countries such as Greece, Bulgaria and Turkey.

{ ActuIA | Continue reading }

The ‘iBorderCtrl’ AI system uses a variety of ‘at home’ pre-registration systems and real-time ‘at the airport’ automatic deception detection systems. One of the critical methods used in automated deception detection is the analysis of micro-expressions. In this opinion article, we argue that, given the psychological sciences’ current understanding of micro-expressions and their associations with deception, such in vivo testing is naïve and misinformed. We consider the lack of empirical research that supports the use of micro-expressions in the detection of deception and question the current understanding of the validity of specific cues to deception. In the absence of clear, definitive and reliable cues to deception, we question the validity of using artificial intelligence that relies on cues to deception which currently have no empirical support.

{ Security Journal | Continue reading }

airports and planes, faces, robots & ai, spy & security | October 6th, 2019 10:44 am

The U.S. government is in the midst of forcing a standoff with China over the deployment of Huawei’s 5G wireless networks around the world. […] This conflict is perhaps the clearest acknowledgement we’re likely to see that our own government knows how much control of communications networks really matters, and that our inability to secure communications on these networks could really hurt us.

{ Cryptography Engineering | Continue reading }

related { Why Controlling 5G Could Mean Controlling the World }

U.S., asia, spy & security, technology | September 30th, 2019 6:25 pm

Paul Hildreth peered at a display of dozens of images from security cameras surveying his Atlanta school district and settled on one showing a woman in a bright yellow shirt walking a hallway.

A mouse click instructed the artificial-intelligence-equipped system to find other images of the woman, and it immediately stitched them into a video narrative of her immediate location, where she had been and where she was going.

There was no threat, but Hildreth’s demonstration showed what’s possible with AI-powered cameras. If a gunman were in one of his schools, the cameras could quickly identify the shooter’s location and movements, allowing police to end the threat as soon as possible, said Hildreth, emergency operations coordinator for Fulton County Schools.

AI is transforming surveillance cameras from passive sentries into active observers that can identify people, suspicious behavior and guns, amassing large amounts of data that help them learn over time to recognize mannerisms, gait and dress. If the cameras have a previously captured image of someone who is banned from a building, the system can immediately alert officials if the person returns.

{ LA Times | Continue reading }

installation sketch { ecstasy, 2018 }

guns, robots & ai, spy & security | September 8th, 2019 1:50 pm

Before you hand over your number, ask yourself: Is it worth the risk? […]

Your phone number may have now become an even stronger identifier than your full name. I recently found this out firsthand when I asked Fyde, a mobile security firm in Palo Alto, Calif., to use my digits to demonstrate the potential risks of sharing a phone number.

He [Mr. Tezisci, of Fyde] quickly plugged my cellphone number into a public records directory. Soon, he had a full dossier on me, including my name and birth date, my address, the property taxes I pay and the names of members of my family.

From there, it could have easily gotten worse. Mr. Tezisci could have used that information to try to answer security questions to break into my online accounts. Or he could have targeted my family and me with sophisticated phishing attacks.

{ NY Times | Continue reading }

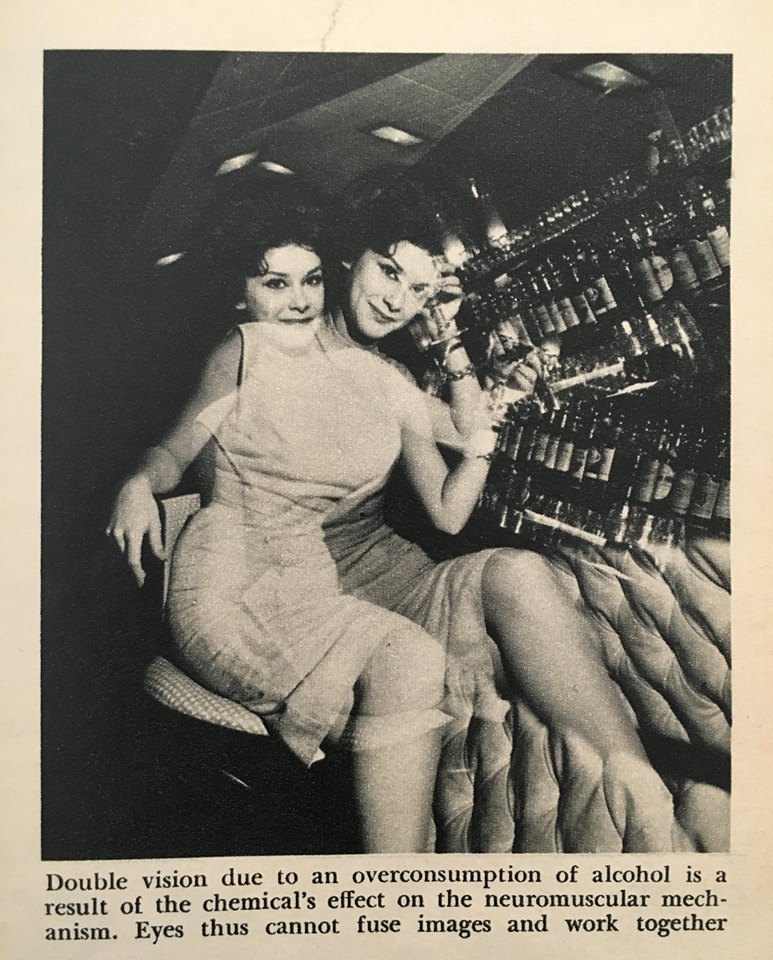

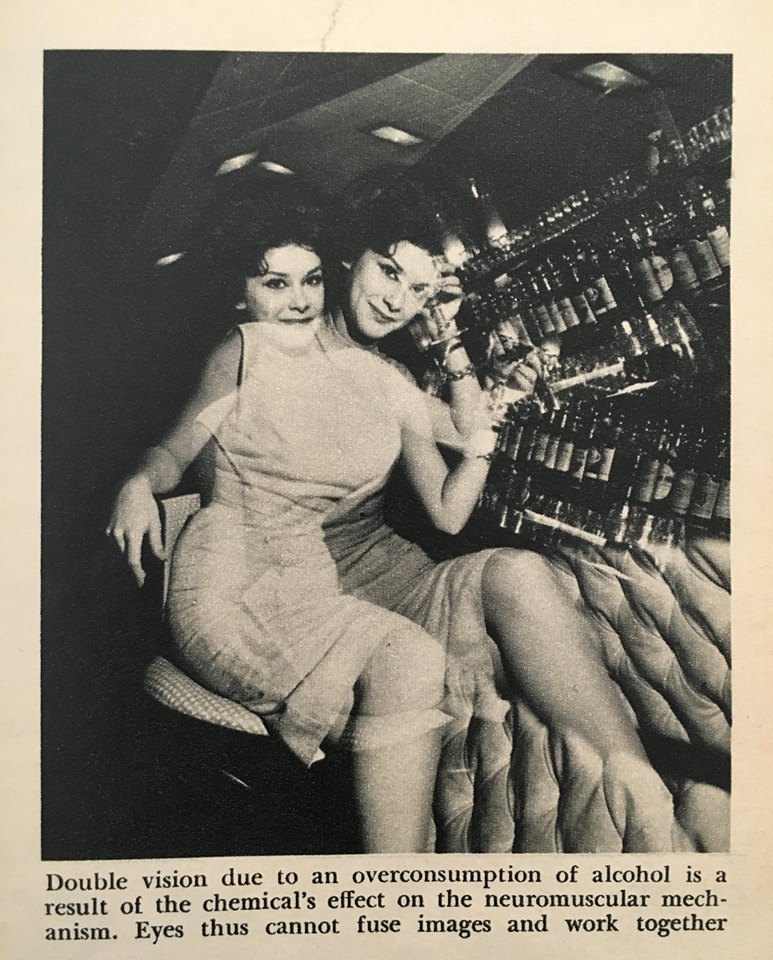

image { Bell telephone magazine, March/April 1971 }

guide, spy & security, technology | August 15th, 2019 9:38 am

Behavioural patterns of Londoners going about their daily business are being tracked and recorded on an unprecedented scale, internet expert Ben Green warns. […]

Large-scale London data-collection projects include on-street free Wi-Fi beamed from special kiosks, smart bins, police facial recognition and soon 5G transmitters embedded in lamp posts.

Transport for London announced this week they would track, collect and analyse movements of commuters around 260 Tube stations starting from July by using mobile Wi-Fi data and device MAC addresses to help improve journeys. Customers can opt out by turning off their Wi-Fi.

{ Standard | Continue reading }

previously { The Business of Selling Your Location }

art { Poster for Autechre by the Designers Republic, 2016 }

spy & security, technology | May 31st, 2019 5:35 am

In Shenzhen, the local subway operator is testing various advanced technologies backed by the ultra-fast 5G network, including facial-recognition ticketing.

At the Futian station, instead of presenting a ticket or scanning a QR bar code on their smartphones, commuters can scan their faces on a tablet-sized screen mounted on the entrance gate and have the fare automatically deducted from their linked accounts. […]

Consumers can already pay for fried chicken at KFC in China with its “Smile to Pay” facial recognition system, first introduced at an outlet in Hangzhou in January 2017. […]

Chinese cities are among the most digitally savvy and cashless in the world, with about 583 million people using their smartphones to make payments in China last year, according to the China Internet Network Information Center. Nearly 68 per cent of China’s internet users used a mobile wallet for their offline payments.

{ South China Morning Post | Continue reading }

photo { The Collection of the Australian National Maritime Museum }

asia, faces, robots & ai, spy & security | May 27th, 2019 7:42 am

Products developed by companies such as Activtrak allow employers to track which websites staff visit, how long they spend on sites deemed “unproductive” and set alarms triggered by content considered dangerous. […]

To quantify productivity, “profiles” of employee behaviour — which can be as granular as mapping an individual’s daily activity — are generated from “vast” amounts of data. […]

If combined with personal details, such as someone’s age and sex, the data could allow employers to develop a nuanced picture of ideal employees, choose whom they considered most useful and help with promotion and firing decisions. […]

Some technology, including Teramind’s and Activtrak’s, permits employers to take periodic computer screenshots or screen-videos — either with employees’ knowledge or in “stealth” mode — and use AI to assess what it captures.

Depending on the employer’s settings, screenshot analysis can alert them to things like violent content or time spent on LinkedIn job adverts.

But screenshots could also include the details of private messages, social media activity or credit card details in ecommerce checkouts, which would then all be saved to the employer’s database. […]

Meanwhile, smart assistants, such as Amazon’s Alexa for Business, are being introduced into workplaces, but it is unclear how much of office life the devices might record, or what records employers might be able to access.

{ Financial Times | Continue reading }

Google uses Gmail to track a history of things you buy. […] Google says it doesn’t use this information to sell you ads.

{ CNBC | Continue reading }

unrelated { Navy SEAL’s lawyers received emails embedded with tracking software }

photo { Philip-Lorca diCorcia, Paris, 1996 }

photogs, spy & security, technology | May 21st, 2019 11:36 am

[I]nside of a Google server or a Facebook server is a little voodoo doll, avatar-like version of you […] All I have to do is simulate what conversation the voodoo doll is having, and I know the conversation you just had without having to listen to the microphone.

{ Quartz | Continue reading }

…a phenomenon privacy advocates have long referred to as the “if you build it, they will come” principle — anytime a technology company creates a system that could be used in surveillance, law enforcement inevitably comes knocking. Sensorvault, according to Google employees, includes detailed location records involving at least hundreds of millions of devices worldwide and dating back nearly a decade.

The new orders, sometimes called “geofence” warrants, specify an area and a time period, and Google gathers information from Sensorvault about the devices that were there. It labels them with anonymous ID numbers, and detectives look at locations and movement patterns to see if any appear relevant to the crime. Once they narrow the field to a few devices they think belong to suspects or witnesses, Google reveals the users’ names and other information. […]

Google uses the data to power advertising tailored to a person’s location, part of a more than $20 billion market for location-based ads last year.

{ NY Times | Continue reading }
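The Times describes the geofence-warrant procedure only in prose. As a rough illustration (not Google’s actual Sensorvault system, whose internals aren’t public), the two stages might look like the Python sketch below: first select every device seen inside an area and time window and hand investigators anonymous labels, then unmask only the labels they pick. The record fields, the rectangular fence, and the `anon-N` labels are hypothetical, and the sketch only returns in-fence records, whereas the article says detectives also study wider movement patterns.

```python
from dataclasses import dataclass
from datetime import datetime
import itertools


@dataclass(frozen=True)
class LocationRecord:
    device_id: str        # hypothetical: the provider's real device/account identifier
    lat: float
    lon: float
    timestamp: datetime


@dataclass(frozen=True)
class Geofence:
    # Hypothetical rectangular area plus a time window, standing in for the
    # "area and a time period" a warrant specifies.
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float
    start: datetime
    end: datetime

    def contains(self, r: LocationRecord) -> bool:
        return (self.min_lat <= r.lat <= self.max_lat
                and self.min_lon <= r.lon <= self.max_lon
                and self.start <= r.timestamp <= self.end)


def anonymized_hits(records, fence):
    """Stage 1: group in-fence records under anonymous labels.

    Returns (hits, aliases): hits maps each anonymous label to that device's
    in-fence records (what investigators see); aliases maps real device IDs
    to labels and stays with the provider.
    """
    counter = itertools.count(1)
    aliases = {}
    hits = {}
    for r in records:
        if fence.contains(r):
            if r.device_id not in aliases:
                aliases[r.device_id] = f"anon-{next(counter)}"
            hits.setdefault(aliases[r.device_id], []).append(r)
    return hits, aliases


def unmask(selected_labels, aliases):
    """Stage 2: reveal real identifiers only for the labels investigators selected."""
    reverse = {label: dev for dev, label in aliases.items()}
    return [reverse[label] for label in selected_labels]


if __name__ == "__main__":
    t = datetime(2019, 1, 1, 12, 0)
    records = [
        LocationRecord("device-A", 33.750, -84.390, t),
        LocationRecord("device-B", 33.800, -84.500, t),  # outside the fence
        LocationRecord("device-A", 33.751, -84.391, t.replace(minute=5)),
        LocationRecord("device-C", 33.749, -84.389, t.replace(minute=10)),
    ]
    fence = Geofence(33.74, 33.76, -84.40, -84.38, t, t.replace(minute=30))
    hits, aliases = anonymized_hits(records, fence)
    for label, recs in hits.items():
        print(label, len(recs), "record(s)")
    print(unmask(["anon-2"], aliases))
```

Keeping the alias table separate from the labelled hits mirrors the two-step disclosure the article describes: location patterns are shared first, and names only after the field has been narrowed.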

robots & ai, spy & security, technology | May 5th, 2019 11:22 am

We’ve all been making some big choices, consciously or not, as advancing technology has transformed the real and virtual worlds. That phone in your pocket, the surveillance camera on the corner: You’ve traded away a bit of anonymity, of autonomy, for the usefulness of one, the protection of the other.

Many of these trade-offs were clearly worthwhile. But now the stakes are rising and the choices are growing more fraught. Is it O.K., for example, for an insurance company to ask you to wear a tracker to monitor whether you’re getting enough exercise, and set your rates accordingly? Would it concern you if police detectives felt free to collect your DNA from a discarded coffee cup, and to share your genetic code? What if your employer demanded access to all your digital activity, so that it could run that data through an algorithm to judge whether you’re trustworthy?

These sorts of things are already happening in the United States.

{ NY Times | Continue reading }

spy & security, technology | April 22nd, 2019 6:58 am

“Connecting” online once referred to ways of communicating; now it is understood as a means of digital totalization, typically euphemized as objects becoming “smart.” Each data-collecting object requires a further smartening of more objects, so that the data collected can be made more useful and lucrative, can be properly contextualized within the operation of other objects. You can’t opt in or out of this kind of connectedness.

{ Rob Horning/Real Life | Continue reading }

spy & security, technology | January 20th, 2019 10:21 am

The ATM-busting technique, known as jackpotting, has been around for almost a decade […] ATM jackpotting is both riskier and more complicated than card-skimming. For starters, scammers have to hack into the computer that governs the cash dispenser, which usually involves physically breaking into the machine itself; once they’re in, they install malware that tells the ATM to release all of its cash, just like a jackpot at a slot machine. These obstacles mean the process takes quite a bit longer than installing a card skimmer, which means more time in front of the ATM’s security cameras and more chances for jackpotters to trigger an alarm in the bank’s control center at every step. But as chip-and-PIN becomes the standard in the U.S., would-be ATM thieves are running out of other options. […]

It was the Secret Service’s financial crimes division that spotted the series of attacks on multiple locations of the same bank in Florida in December and January, and put out a bulletin to financial institutions, law enforcement, and the public about the new style of ATM theft. The two major global ATM manufacturers, Diebold Nixdorf and NCR, also alerted the public and issued security patches within a few days. Banks started monitoring their ATMs around the clock. Less than 24 hours after the Secret Service’s public alert, Citizens Financial Group, a regional bank with branches all over the Northeast, notified the local police that its security staff had noticed one of its ATMs go offline. The police contacted the Secret Service, which made its first arrest on the scene.

{ Bloomberg | Continue reading }

photo { Jerome Liebling, Union Square, New York City, 1948 }

economics, scams and heists, spy & security | July 9th, 2018 5:19 am

Ross McNutt is an Air Force Academy graduate, physicist, and MIT-trained astronautical engineer who in 2004 founded the Air Force’s Center for Rapid Product Development. The Pentagon asked him if he could develop something to figure out who was planting the roadside bombs that were killing and maiming American soldiers in Iraq. In 2006 he gave the military Angel Fire, a wide-area, live-feed surveillance system that could cast an unblinking eye on an entire city.

The system was built around an assembly of four to six commercially available industrial imaging cameras, synchronized and positioned at different angles, then attached to the bottom of a plane. As the plane flew, computers stabilized the images from the cameras, stitched them together and transmitted them to the ground at a rate of one per second. This produced a searchable, constantly updating photographic map that was stored on hard drives. His elevator pitch was irresistible: “Imagine Google Earth with TiVo capability.” […]

If a roadside bomb exploded while the camera was in the air, analysts could zoom in to the exact location of the explosion and rewind to the moment of detonation. Keeping their eyes on that spot, they could further rewind the footage to see a vehicle, for example, that had stopped at that location to plant the bomb. Then they could backtrack to see where the vehicle had come from, marking all of the addresses it had visited. They also could fast-forward to see where the driver went after planting the bomb—perhaps a residence, or a rebel hideout, or a stash house of explosives. More than merely identifying an enemy, the technology could identify an enemy network. […]

McNutt retired from the military in 2007 and modified the technology for commercial development. […] His first customer was José Reyes Ferriz, the mayor of Ciudad Juárez, in northern Mexico. In 2009 a war between the Sinaloa and Juárez drug cartels had turned his border town into the most deadly city on earth. […]

Within the first hour of operations, his cameras witnessed two murders. “A 9-millimeter casing was all the evidence they’d had,” McNutt says. By tracking the assailants’ vehicles, McNutt’s small team of analysts helped police identify the headquarters of a cartel kill squad and pinpoint a separate cartel building where the murderers got paid for the hit.

The technology led to dozens of arrests and confessions, McNutt says, but within a few months the city ran out of money to continue paying for the service.

{ Bloomberg | Continue reading | Radiolab }

photo { William Eggleston, Untitled (Two Girls Walking), 1970-73 }

spy & security, technology | December 17th, 2017 5:16 pm

The Food and Drug Administration has approved the first digital pill in the US that tracks whether patients have taken their medication. The pill, called Abilify MyCite, is fitted with a tiny ingestible sensor that communicates with a patch worn by the patient. The patch then transmits medication data to a smartphone app, and the patient can voluntarily upload that data to a database for their doctor and other authorized persons to see. Abilify is a drug that treats schizophrenia and bipolar disorder, and is also used as an add-on treatment for depression.

{ The Verge | Continue reading }

photo { Bruce Davidson, Subway platform in Brooklyn, 1980 }

health, photogs, spy & security, technology | November 20th, 2017 3:27 pm

In what appears to be the first successful hack of a software program using DNA, researchers say malware they incorporated into a genetic molecule allowed them to take control of a computer used to analyze it. […]

To carry out the hack, researchers encoded malicious software in a short stretch of DNA they purchased online. They then used it to gain “full control” over a computer that tried to process the genetic data after it was read by a DNA sequencing machine.

The researchers warn that hackers could one day use faked blood or spit samples to gain access to university computers, steal information from police forensics labs, or infect genome files shared by scientists.

{ Technology Review | Continue reading }

genes, spy & security, technology | August 10th, 2017 4:19 pm

When the National Security Agency began using a new hacking tool called EternalBlue, those entrusted with deploying it marveled at both its uncommon power and the widespread havoc it could wreak if it ever got loose.

Some officials even discussed whether the flaw was so dangerous they should reveal it to Microsoft, the company whose software the government was exploiting, according to former NSA employees who spoke on the condition of anonymity given the sensitivity of the issue.

But for more than five years, the NSA kept using it — through a time period that has seen several serious security breaches — and now the officials’ worst fears have been realized. The malicious code at the heart of the WannaCry virus that hit computer systems globally late last week was apparently stolen from the NSA, repackaged by cybercriminals and unleashed on the world for a cyberattack that now ranks as among the most disruptive in history.

{ Washington Post | Continue reading }

screenshot { Ben Thorp Brown, Drowned World, 2016 }

spy & security | May 17th, 2017 5:06 pm

The travel booking systems used by millions of people every day are woefully insecure and lack modern authentication methods. This allows attackers to easily modify other people’s reservations, cancel their flights and even use the refunds to book tickets for themselves.

{ Computer World | Continue reading }

related { By posting a picture of your boarding pass online, you may be giving away more information than you think }

airports and planes, spy & security, technology | January 3rd, 2017 11:22 am

Someone Is Learning How to Take Down the Internet

Recently, some of the major companies that provide the basic infrastructure that makes the Internet work have seen an increase in DDoS attacks against them. Moreover, they have seen a certain profile of attacks. These attacks are significantly larger than the ones they’re used to seeing. They last longer. They’re more sophisticated. And they look like probing. One week, the attack would start at a particular level of attack and slowly ramp up before stopping. The next week, it would start at that higher point and continue. And so on, along those lines, as if the attacker were looking for the exact point of failure. […]

We don’t know where the attacks come from. The data I see suggests China, an assessment shared by the people I spoke with. On the other hand, it’s possible to disguise the country of origin for these sorts of attacks. The NSA, which has more surveillance in the Internet backbone than everyone else combined, probably has a better idea, but unless the US decides to make an international incident over this, we won’t see any attribution.

{ Bruce Schneier | Continue reading }

polaroid photograph { Andy Warhol, Grapes, 1981 }

spy & security, technology | September 14th, 2016 1:53 pm

The June 5 escape from Clinton was planned and executed by two particularly cunning and resourceful inmates, abetted by the willful, criminal conduct of a civilian employee of the prison’s tailor shops and assisted by the reckless actions of a veteran correction officer. The escape could not have occurred, however, except for longstanding breakdowns in basic security functions at Clinton and DOCCS executive management’s failure to identify and correct these deficiencies.

[…]

Using pipes as hand- and foot-holds, Sweat and Matt descended three tiers through a narrow space behind their cells to the prison’s subterranean level. There they navigated a labyrinth of dimly lit tunnels and squeezed through a series of openings in walls and a steam pipe along a route they had prepared over the previous three months. When, at midnight, they emerged from a manhole onto a Village of Dannemora street a block outside the prison wall, Sweat and Matt had accomplished a remarkable feat: the first escape from the high-security section of Clinton in more than 100 years.

[…]

In early 2015, the relationships deepened and Mitchell became an even more active participant in the escape plot, ultimately agreeing to join Sweat and Matt after their breakout and drive away with them. In addition to smuggling escape tools and maps, Mitchell agreed to be a conduit to obtain cash for Matt and gathered items to assist their flight, including guns and ammunition, camping gear, clothing, and a compass. Even as she professed her love for Sweat in notes she secretly sent him, Mitchell engaged in numerous sexual encounters with Matt in the tailor shop. These included kissing, genital fondling, and oral sex.

[…]

The Inspector General is compelled to note that this investigation was made more difficult by a lack of full cooperation on the part of a number of Clinton staff, including executive management, civilian employees, and uniformed officers. Notwithstanding the unprecedented granting of immunity from criminal prosecution for most uniformed officers, employees provided testimony under oath that was incomplete and at times not credible. Among other claims, they testified they could not recall such information as the names of colleagues with whom they regularly worked, supervisors, or staff who had trained them. Several officers, testifying under oath within several weeks of the event, claimed not to remember their activities or observations on the night of the escape. Other employees claimed ignorance of security lapses that were longstanding and widely known.

{ State of New York, Office of the Inspector General | Continue reading }

photo { Chisels, punch, hacksaw blade pieces, and unused drill bits left by Sweat in the tunnel }

crime, new york, spy & security | June 7th, 2016 12:31 pm